Understanding multi-page documents and news videos is a common task in human daily life. To tackle such scenarios, Multimodal Large Language Models (MLLMs) should be equipped with the ability to understand multiple images with rich visually-situated text information. However, comprehending document images is more challenging than natural images, as it requires a more fine-grained perception to recognize all texts. Existing approaches either add a high-resolution encoder or crop high-resolution images into low-resolution sub-images, both of which have limitations.

Previous researchers have tried to solve the challenge of understanding document images using various techniques. Some works proposed adding a high-resolution encoder to better capture the fine-grained text information in document images. Others chose to crop high-resolution images into low-resolution sub-images and let the Large Language Model understand their relationship.

While these approaches have achieved promising performance, they suffer from a common issue – the large number of visual tokens required to represent a single document image. For example, the InternVL 2 model costs an average of 3k visual tokens on the single-page document understanding benchmark DocVQA. Such long visual token sequences not only result in long inference times but also occupy a significant amount of GPU memory, greatly limiting their application in scenarios that involve understanding complete documents or videos.

Researchers from Alibaba Group and Renmin University of China have proposed a robust compressing architecture called High-resolution DocCompressor. This method utilizes the visual features of a global low-resolution image as the compressing guidance (query), as the global feature map can effectively capture the overall layout information of the document.

Instead of attending to all high-resolution features, the High-resolution DocCompressor collects a group of high-resolution features with identical relative positions in the raw image as the compressing objects for each query from the global feature map. This layout-aware approach helps to better summarize the text information within a specific layout region.

In addition, the researchers argue that compressing visual features after the vision-to-text module of the Multimodal Large Language Model can better maintain the textual semantics in the document images, as this is analogous to summarizing texts in Natural Language Processing.

The DocOwl2 model utilizes a Shape-adaptive Cropping Module and a low-resolution vision encoder to encode high-resolution document images. The Shape-adaptive Cropping Module cuts the raw image into multiple low-resolution sub-images, and the low-resolution vision encoder is used to encode both the sub-images and the global image. The model then utilizes a vision-to-text module called H-Reducer to ensemble the horizontal visual features and align the dimension of the vision features with the Large Language Model. In addition to that, DocOwl2 includes a high-resolution compressor, which is the key component of the High-resolution DocCompressor. This compressor utilizes the visual features of the global low-resolution image as the query and collects a group of high-resolution features with identical relative positions in the raw image as the compressing objects for each query. This layout-aware approach helps to better summarize the text information within a specific layout region. Finally, the compressed visual tokens of multiple images or pages are concatenated with text instructions and input to a Large Language Model for multimodal understanding.

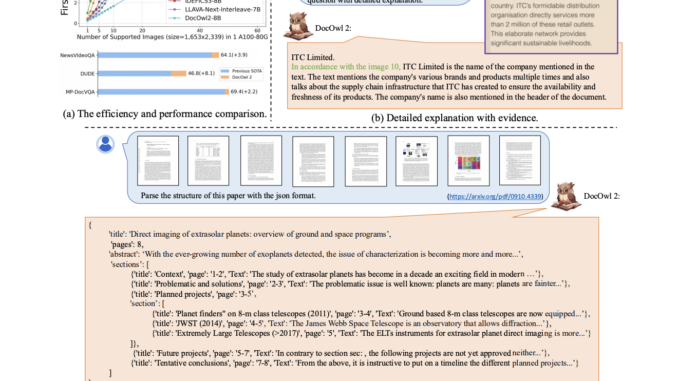

The researchers compared the DocOwl2 model with state-of-the-art Multimodal Large Language Models on 10 single-image document understanding benchmarks, 2 multi-page document understanding benchmarks, and 1 text-rich video understanding benchmark. They considered both the question-answering performance (measured by ANLS) and the First Token Latency (in seconds) to evaluate the effectiveness of their model. For the single-image document understanding task, the researchers divided the baselines into three groups: (a) models without Large Language Models as decoders, (b) Multimodal LLMs with an average of over 1,000 visual tokens per document image, and (c) Multimodal LLMs with less than 1,000 visual tokens.

The results show that although the models specifically fine-tuned on each downstream dataset performed well, the Multimodal LLMs demonstrated the potential for generalized OCR-free document understanding. Compared to other Multimodal LLMs with less than 1,000 visual tokens, the DocOwl2 model achieved better or comparable performance on the 10 benchmarks. Notably, with fewer visual tokens, DocOwl2 outperformed models like TextMonkey and TokenPacker, which also aimed to compress visual tokens, demonstrating the effectiveness of the High-resolution DocCompressor.

Also, when compared to state-of-the-art Multimodal LLMs with over 1,000 visual tokens, the DocOwl2 model achieved over 80% of their performance while using less than 20% of the visual tokens. For the multi-page document understanding and text-rich video understanding tasks, the DocOwl2 model also demonstrated superior performance and significantly lower First Token Latency compared to other Multimodal LLMs that can be fed more than 10 images under a single A100-80G GPU.

This study presents mPLUG-DocOwl2, a Multimodal Large Language Model capable of efficient OCR-free Multi-page Document Understanding. The robust High-resolution DocCompressor architecture compresses each high-resolution document image into just 324 tokens using cross-attention with global visual features as guidance. On single-image benchmarks, DocOwl2 outperforms existing compressing methods and matches state-of-the-art MLLMs while using fewer visual tokens. It also achieves OCR-free state-of-the-art performance on multi-page document and text-rich video understanding tasks with much lower latency. The researchers emphasize that using thousands of visual tokens per document page is often redundant and a waste of computational resources. They hope that DocOwl2 will bring attention to balancing efficient image representation and high-performance document understanding.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and LinkedIn. Join our Telegram Channel.

If you like our work, you will love our newsletter..

Don’t Forget to join our 50k+ ML SubReddit

Asjad is an intern consultant at Marktechpost. He is persuing B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. Asjad is a Machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.

Be the first to comment