Large Language Models (LLMs) have made significant progress in following instructions and responding to user queries. However, the current instruction tuning process faces major challenges. Acquiring human-generated data for training these models is expensive and time-consuming. Moreover, the quality of such data is limited by human capabilities. This limitation is especially evident while addressing the ‘Super Alignment’ challenge, which aims to control potentially super-intelligent AIs whose actions may exceed human comprehension. There is a need to focus on finding effective methods in the AI field to guide LLMs’ development beyond human-level performance as they continue to advance.

Researchers have explored various methods to align LLMs with human values. One popular method is Reinforcement Learning from Human Feedback (RLHF), which uses the Proximal Policy Optimization (PPO) technique to train a reward model based on human preference data. The second method, the LLM-as-a-Judge has gained popularity for evaluation and reward model training. However, these methods often rely on human data or input from stronger models, potentially limiting their effectiveness for super alignment challenges. The last approach, Super Alignment includes Constitutional AI and CriticGPT which attempt to use AI to generate feedback but still struggle with training both the actor and judge components during self-improvement.

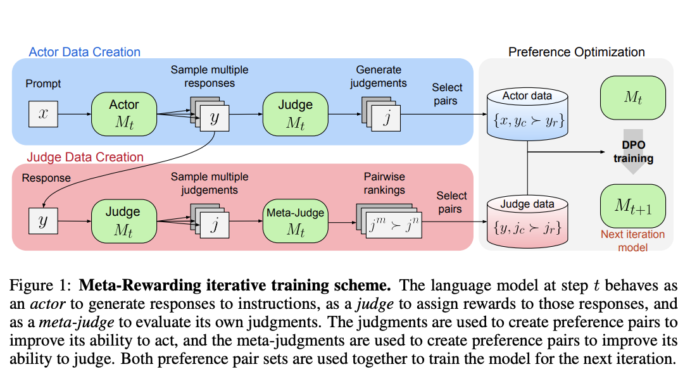

Researchers from Meta FAIR, the University of California, Berkeley, and New York University have introduced a new method called Meta-Rewarding to improve the instruction-following abilities of LLMs. This method adds a third role, the meta-judge, to the existing actor and judge roles. The meta-judge evaluates the model’s judgments using a mechanism similar to LLM-as-a-Judge, called LLM-as-a-Meta-Judge. This process helps to generate training data with preference pairs of judgments, in addition to the standard preferences between actor responses. The Meta-Rewarding enhances the overall instruction-following capability of the model by improving both acting and judging skills.

The Meta-Rewarding method is developed on the instruction-finetuned Llama-3-8B-Instruct model, which serves as the seed model. Researchers performed supervised finetuning (SFT) on the Evaluation Fine-Tuning (EFT) dataset, which includes ranked human responses from Open Assistant. This step enhances the ability of the model to act as a judge. The Meta-Rewarding iterations use 20K prompts generated by Llama-2-70B-Chat. Each iteration samples 5K prompts from this set, performing four iterations. This iterative approach enhances the model’s performance in acting and judging roles. The experimental setup of previous work is closely followed, adapting it to include the meta-judge component for self-improvement.

The results obtained on evaluating Meta-Rewarding show that the length-controlled win rate increased from 22.9% to 39.4% on AlpacaEval, outperforming even GPT-4-0314. This method also outperforms the enhanced standard Self-Rewarding training, which had a win rate of 35.5%, highlighting the importance of the meta-judge. The same performance is seen on the Arena-Hard benchmark, which tests models’ ability to handle complex questions. After four iterations, Meta-Rewarding consistently improved scores, achieving an 8.5% increase over the seed model’s 20.6% score. These results prove that Meta-Rewarding enhances LLMs’ capabilities in following instructions and answering complex questions.

In conclusion, researchers proposed Meta-Rewarding, a new method to enhance the instruction-following abilities of LLMs. This method utilizes a meta-judge to evaluate and choose judgments for optimizing preferences, which addresses the limitations of previous Self-Rewarding frameworks by directly training the judge. Moreover, it includes a novel length-control technique to address issues of length explosion during AI feedback training. The model’s judgment abilities align more closely with human judges and advanced AI judges like GPT-4. However, the researchers address a limitation in their 5-point judging system, which occasionally leads to ties due to minimal differences in response quality.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter and join our Telegram Channel and LinkedIn Group. If you like our work, you will love our newsletter..

Don’t Forget to join our 48k+ ML SubReddit

Find Upcoming AI Webinars here

Sajjad Ansari is a final year undergraduate from IIT Kharagpur. As a Tech enthusiast, he delves into the practical applications of AI with a focus on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible manner.

Be the first to comment