Recommendation systems are essential for connecting users with relevant content, products, or services. Dense retrieval methods have been a mainstay in this field, using sequence modeling to compute item and user representations. However, these methods demand substantial computational resources and storage, because they require an embedding for every item. As datasets grow, these requirements become increasingly burdensome and limit scalability. Generative retrieval, an emerging alternative, reduces storage needs by using a generative model to predict item indices directly. Despite its potential, it lags in recommendation quality, especially on cold-start items (new items with limited user interactions). No unified framework has combined the strengths of the two approaches, which leaves a gap in addressing the trade-offs between computation, storage, and recommendation quality.

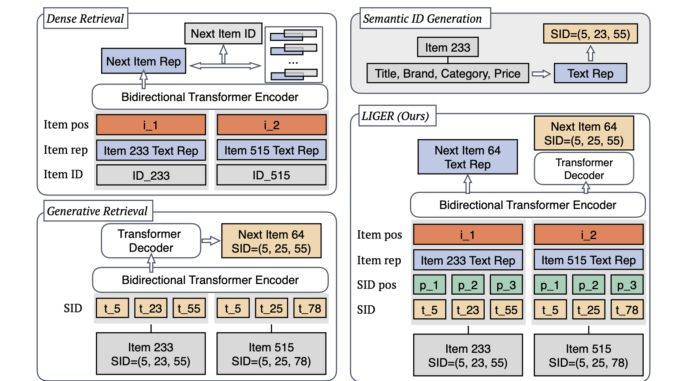

Researchers from the University of Wisconsin-Madison; the ELLIS Unit, LIT AI Lab, Institute for Machine Learning, JKU Linz, Austria; and Meta AI have introduced LIGER (LeveragIng dense retrieval for GEnerative Retrieval), a hybrid retrieval model that blends the computational efficiency of generative retrieval with the precision of dense retrieval. LIGER first generates a candidate set with generative retrieval and then refines it with dense retrieval techniques, balancing efficiency and accuracy. The model uses item representations derived from semantic IDs and text-based attributes, combining the strengths of both paradigms. In doing so, LIGER reduces storage and computational overhead while closing performance gaps, particularly in scenarios involving cold-start items.

Technical Details and Benefits

LIGER employs a bidirectional Transformer encoder alongside a generative decoder. The dense retrieval component integrates item text representations, semantic IDs, and positional embeddings, optimized using a cosine similarity loss. The generative component uses beam search to predict semantic IDs of subsequent items based on user interaction history. This combination allows LIGER to retain generative retrieval’s efficiency while addressing its limitations with cold-start items. The model’s hybrid inference process, which first retrieves a candidate set via generative retrieval and then refines it through dense retrieval, effectively reduces computational demands while maintaining recommendation quality. Additionally, by incorporating textual representations, LIGER generalizes well to unseen items, addressing a key limitation of prior generative models.
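
The two-stage inference described above can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the toy 2-dimensional vectors, and the hard-coded candidate list (standing in for the output of beam search over semantic IDs) are all assumptions made for illustration. It only shows the general idea of scoring a small generated candidate set by cosine similarity instead of scoring every item in the catalog.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rerank_candidates(user_vec, candidate_ids, item_vecs, top_k):
    """Dense stage: rank only the generated candidates by similarity to the user vector."""
    scored = [(cosine(user_vec, item_vecs[i]), i) for i in candidate_ids]
    # Highest similarity first; ties broken by item id for determinism.
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [item_id for _, item_id in scored[:top_k]]


# Hypothetical toy catalog: item id -> dense representation.
item_vecs = {
    "item_a": [1.0, 0.0],
    "item_b": [0.0, 1.0],
    "item_c": [1.0, 1.0],
    "item_d": [-1.0, 0.0],
}

# Hypothetical user representation produced by the sequence encoder.
user_vec = [1.0, 0.2]

# Generative stage (assumed): in a real system this list would come from
# beam search over semantic IDs; here it is simply hard-coded.
candidates = ["item_b", "item_c", "item_d"]

top = rerank_candidates(user_vec, candidates, item_vecs, top_k=2)
print(top)
```

In this sketch the dense stage computes similarities for three candidates rather than the whole catalog, which is the source of the computational saving the article describes. The size of the candidate set is the knob that trades cost against quality.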

Results and Insights

Evaluations of LIGER across benchmark datasets, including Amazon Beauty, Sports, Toys, and Steam, show consistent improvements over state-of-the-art models like TIGER and UniSRec. For example, LIGER achieved a Recall@10 score of 0.1008 for cold-start items on the Amazon Beauty dataset, compared to TIGER’s 0.0. On the Steam dataset, LIGER’s Recall@10 for cold-start items reached 0.0147, again outperforming TIGER’s 0.0. These findings demonstrate LIGER’s ability to merge generative and dense retrieval techniques effectively. Moreover, as the number of candidates retrieved by generative methods increases, LIGER narrows the performance gap with dense retrieval. This adaptability and efficiency make it suitable for diverse recommendation scenarios.

Conclusion

LIGER offers a thoughtful integration of dense and generative retrieval, addressing challenges in efficiency, scalability, and handling cold-start items. Its hybrid architecture balances computational efficiency with high-quality recommendations, making it a viable solution for modern recommendation systems. By bridging gaps in existing approaches, LIGER lays the groundwork for further exploration into hybrid retrieval models, fostering innovation in recommendation systems.

Check out the Paper. All credit for this research goes to the researchers of this project.


Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts of over 2 million monthly views, illustrating its popularity among audiences.
