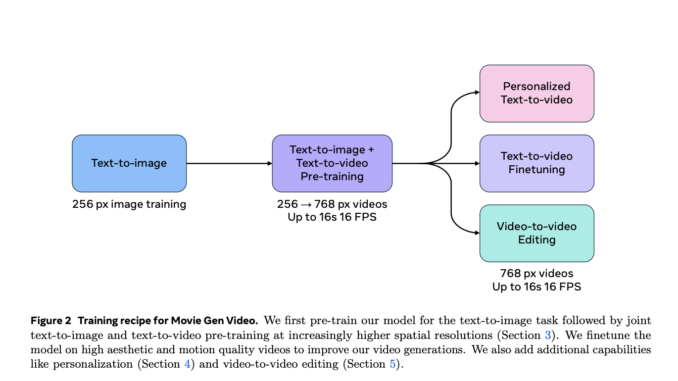

Meta AI’s research team has introduced MovieGen, a suite of state-of-the-art (SotA) media foundation models that could reshape how we generate and interact with media content. The work covers text-to-video generation, video personalization, and video editing, including personalized video creation from user-provided images. At the core of MovieGen are advances in model architecture, training methodology, and inference techniques that allow media generation to scale to very large models and high-resolution outputs.

Key Features of MovieGen

High-Resolution Video Generation

One of MovieGen’s standout features is its ability to generate 16-second videos at 1080p resolution and 16 frames per second (fps), which can then be paired with synchronized audio from the separate audio model described below. Video generation is handled by a 30-billion-parameter model that uses latent diffusion techniques, generating in a compressed latent space rather than directly on pixels. The model produces high-quality, coherent videos that closely follow their textual prompts, opening up new possibilities for content creation and storytelling.
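To see why generating in a latent space matters, here is back-of-envelope arithmetic for a 16-second, 16 fps, 1080p RGB clip. The 8x temporal and spatial downsampling and the 16 latent channels are hypothetical values chosen only for illustration; the article does not state MovieGen’s autoencoder settings.

```python
# Back-of-envelope size of a 16 s, 16 fps, 1080p RGB clip versus a compressed latent.
# The compression settings below are HYPOTHETICAL, chosen only for illustration.
SECONDS, FPS = 16, 16
HEIGHT, WIDTH, RGB_CHANNELS = 1080, 1920, 3

HYPOTHETICAL_TIME_DOWN = 8        # assumed temporal downsampling of the autoencoder
HYPOTHETICAL_SPACE_DOWN = 8       # assumed spatial downsampling per axis
HYPOTHETICAL_LATENT_CHANNELS = 16 # assumed number of latent channels


def pixel_values(frames: int) -> int:
    """Number of scalar values in the raw RGB video."""
    return frames * HEIGHT * WIDTH * RGB_CHANNELS


def latent_values(frames: int) -> int:
    """Number of scalar values in the (hypothetical) latent video."""
    return (
        (frames // HYPOTHETICAL_TIME_DOWN)
        * (HEIGHT // HYPOTHETICAL_SPACE_DOWN)
        * (WIDTH // HYPOTHETICAL_SPACE_DOWN)
        * HYPOTHETICAL_LATENT_CHANNELS
    )


frames = SECONDS * FPS                          # 256 frames
raw, latent = pixel_values(frames), latent_values(frames)
print(frames, raw, latent, raw / latent)        # 256 1592524800 16588800 96.0
```

Under these assumed settings the generator would work on roughly 96 times fewer values than raw pixels, which is the general motivation for latent diffusion.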

Advanced Audio Synthesis

In addition to video generation, MovieGen introduces a 13-billion-parameter model designed for video- and text-to-audio synthesis. This model generates 48kHz cinematic audio synchronized with the visual input and handles media of variable length, up to 30 seconds. By learning associations between visuals and sound, it can create both diegetic sounds (originating within the scene, such as footsteps) and non-diegetic sounds and music (such as a background score), enhancing the realism and emotional impact of the generated media.

Versatile Audio Context Handling

The audio generation capabilities of MovieGen are further enhanced through masked audio prediction training, in which the model learns to predict masked-out portions of the audio from the surrounding context. This lets it handle different audio contexts: generating audio from scratch, extending an existing clip, or infilling a missing segment. The same model can therefore serve a variety of audio tasks without separate specialized models, making it a versatile tool for content creators.
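A minimal sketch of how training masks for these three settings might be built, assuming audio is represented as a sequence of latent frames (750 frames would cover 30 seconds at 25 latent frames per second). The helper names `make_mask` and `build_conditioning`, the random span choices, the 64 latent channels, and the idea of zeroing masked frames and appending the mask as an extra channel are illustrative assumptions, not MovieGen’s documented implementation.

```python
import numpy as np

# HYPOTHETICAL helpers illustrating masked audio prediction; not MovieGen's actual code.


def make_mask(num_frames: int, task: str, rng: np.random.Generator) -> np.ndarray:
    """Boolean mask over latent frames: True marks a frame the model must predict."""
    if num_frames < 3:
        raise ValueError("need at least 3 frames to leave context on both sides")
    mask = np.zeros(num_frames, dtype=bool)
    if task == "generation":
        mask[:] = True                              # no audio context at all
    elif task == "extension":
        start = rng.integers(1, num_frames)         # keep a non-empty prefix as context
        mask[start:] = True
    elif task == "infilling":
        start = rng.integers(1, num_frames - 1)     # context before the gap
        end = rng.integers(start + 1, num_frames)   # context after the gap
        mask[start:end] = True
    else:
        raise ValueError(f"unknown task: {task}")
    return mask


def build_conditioning(latents: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Hide the frames to be predicted and append the mask as an extra feature channel."""
    visible = np.where(mask[:, None], 0.0, latents)  # zero out masked frames
    return np.concatenate([visible, mask[:, None].astype(latents.dtype)], axis=1)


rng = np.random.default_rng(0)
latents = rng.standard_normal((750, 64))  # 30 s at 25 Hz, 64 hypothetical channels
task = rng.choice(["generation", "extension", "infilling"])  # one task per example
mask = make_mask(len(latents), str(task), rng)
cond = build_conditioning(latents, mask)
print(task, int(mask.sum()), cond.shape)
```

Sampling the task per training example is what lets a single set of weights cover all three use cases at inference time.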

Efficient Training and Inference

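MovieGen uses the Flow Matching objective for training and inference, combined with a Diffusion Transformer (DiT) architecture. Flow Matching trains the network to predict the velocity that moves a sample along a simple path from noise to data, which yields a straightforward regression loss during training and lets generation run with a relatively small number of integration steps. This helps speed up training and reduce computational requirements, enabling faster generation of high-quality media content.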
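A minimal NumPy sketch of a Flow Matching training loss and an Euler sampler. It assumes the plain straight-line path x_t = t·x1 + (1 − t)·x0 between noise x0 and data x1 (the rectified-flow variant); the exact path, time sampling, and network MovieGen uses may differ. To keep it runnable, the "model" is a hypothetical closed-form velocity field for a toy dataset concentrated at a single point, standing in for the large transformer.

```python
import numpy as np


def flow_matching_loss(velocity_model, x1: np.ndarray, rng: np.random.Generator) -> float:
    """Conditional flow matching loss for a batch of data x1 with shape (batch, dim)."""
    x0 = rng.standard_normal(x1.shape)                # noise endpoint
    t = rng.uniform(0.0, 1.0, size=(x1.shape[0], 1))  # one time per example, in [0, 1)
    xt = t * x1 + (1.0 - t) * x0                      # point on the straight path
    target = x1 - x0                                  # velocity along that path
    pred = velocity_model(xt, t)
    return float(np.mean((pred - target) ** 2))


def euler_sample(velocity_model, x0: np.ndarray, num_steps: int = 10) -> np.ndarray:
    """Integrate dx/dt = v(x, t) from t = 0 (noise) to t = 1 (data) with Euler steps."""
    x = x0.copy()
    dt = 1.0 / num_steps
    for k in range(num_steps):
        t = np.full((x.shape[0], 1), k * dt)
        x = x + dt * velocity_model(x, t)
    return x


# HYPOTHETICAL stand-in for the network: the exact velocity field when all data equals MU.
MU = np.array([[2.0, -1.0]])


def toy_velocity(x: np.ndarray, t: np.ndarray) -> np.ndarray:
    return (MU - x) / (1.0 - t)


rng = np.random.default_rng(0)
data = np.repeat(MU, 16, axis=0)
loss = flow_matching_loss(toy_velocity, data, rng)  # ~0: the toy field is exact
samples = euler_sample(toy_velocity, rng.standard_normal((4, 2)))
print(loss, samples)
```

With real data the velocity field has no closed form, which is why a network is trained to regress `target` from `xt` and `t`; sampling then follows the learned field from noise to data.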

Technical Details

Latent Diffusion with DAC-VAE

At the technical core of MovieGen’s audio capabilities is latent diffusion in the latent space of DAC-VAE, a neural audio autoencoder. DAC-VAE encodes 48kHz audio into latent frames at 25Hz, achieving higher reconstruction quality at a lower frame rate than earlier neural audio codecs such as Encodec. The result is crisp, high-fidelity audio that matches the cinematic quality of the generated videos.
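The 48kHz-to-25Hz figures imply a large temporal compression. The arithmetic below works it out; the stride tuple is a hypothetical example of how a strided convolutional encoder could reach that hop size, not DAC-VAE’s published configuration.

```python
import math

SAMPLE_RATE_HZ = 48_000  # audio sample rate stated in the article
LATENT_RATE_HZ = 25      # DAC-VAE latent frame rate stated in the article


def hop_size(sample_rate: int, latent_rate: int) -> int:
    """Waveform samples summarized by one latent frame."""
    if sample_rate % latent_rate:
        raise ValueError("sample rate must be a multiple of the latent rate")
    return sample_rate // latent_rate


def sequence_lengths(duration_s: int) -> tuple[int, int]:
    """(waveform samples, latent frames) for a clip of the given duration."""
    return duration_s * SAMPLE_RATE_HZ, duration_s * LATENT_RATE_HZ


# HYPOTHETICAL per-layer encoder strides whose product equals the hop size.
HYPOTHETICAL_STRIDES = (2, 4, 5, 6, 8)

hop = hop_size(SAMPLE_RATE_HZ, LATENT_RATE_HZ)
assert math.prod(HYPOTHETICAL_STRIDES) == hop
samples, frames = sequence_lengths(30)  # the 30-second maximum mentioned above
print(hop, samples, frames)             # 1920 1440000 750
```

For a 30-second clip, the generative model therefore works on 750 latent frames instead of 1.44 million waveform samples.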

DAC-VAE Enhancements

The DAC-VAE model incorporates several enhancements to improve audio reconstruction at compressed rates:

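Multi-scale Short-Time Fourier Transform (STFT): Analyzing audio with STFTs at several window sizes captures both time- and frequency-domain information, since short windows resolve fine temporal detail and long windows resolve fine frequency detail.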

Snake Activation Functions: Snake activations, defined as x + (1/α)·sin²(αx), add a periodic inductive bias suited to audio waveforms, which helps reduce artifacts.

Removal of Residual Vector Quantization (RVQ): Dropping RVQ in favor of a continuous latent trained with a Variational Autoencoder (VAE) objective gives the model superior reconstruction quality.
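The three ingredients above can be sketched in NumPy using their standard textbook forms: the Snake activation, a multi-scale STFT distance between a reference and a reconstruction, and the Gaussian KL term that a VAE bottleneck adds in place of RVQ. The window sizes, hop length, log-magnitude L1 distance, and epsilon are hypothetical choices, and the article does not say whether MovieGen applies the multi-scale STFT as a loss, a discriminator input, or both.

```python
import numpy as np


def snake(x, alpha=1.0):
    """Snake activation: x + (1 / alpha) * sin^2(alpha * x)."""
    return x + np.sin(alpha * x) ** 2 / alpha


def stft_magnitude(signal: np.ndarray, n_fft: int, hop: int) -> np.ndarray:
    """Hann-windowed magnitude STFT; rows are frames, columns are frequency bins."""
    frames = np.lib.stride_tricks.sliding_window_view(signal, n_fft)[::hop]
    return np.abs(np.fft.rfft(frames * np.hanning(n_fft), axis=-1))


def multiscale_stft_distance(reference, reconstruction, sizes=(256, 512, 1024), eps=1e-5):
    """Mean L1 gap between log-magnitude spectrograms, averaged over window sizes."""
    total = 0.0
    for n_fft in sizes:
        ref = stft_magnitude(reference, n_fft, n_fft // 4)
        rec = stft_magnitude(reconstruction, n_fft, n_fft // 4)
        total += np.mean(np.abs(np.log(ref + eps) - np.log(rec + eps)))
    return float(total / len(sizes))


def gaussian_kl(mean: np.ndarray, log_var: np.ndarray) -> float:
    """KL(N(mean, exp(log_var)) || N(0, 1)), summed over latent dimensions."""
    return float(0.5 * np.sum(np.exp(log_var) + mean**2 - 1.0 - log_var))


t = np.arange(48_000) / 48_000                     # one second at 48 kHz
clean = np.sin(2 * np.pi * 440.0 * t)              # 440 Hz test tone
noisy = clean + 0.05 * np.random.default_rng(0).standard_normal(t.shape)
print(multiscale_stft_distance(clean, clean))      # 0.0 for identical signals
print(multiscale_stft_distance(clean, noisy) > 0)  # True
print(snake(np.array([-1.0, 0.0, 1.0]), alpha=2.0))
print(gaussian_kl(np.zeros(8), np.zeros(8)))       # 0.0 for a standard normal
```

The KL term is what replaces quantization: instead of snapping latents to codebook entries, the encoder outputs a mean and variance that are gently pulled toward a standard normal, leaving continuous latents for the diffusion model.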

Applications and Implications

The introduction of MovieGen marks a significant leap forward in media generation technology. By combining high-resolution video generation with advanced audio synthesis, MovieGen enables the creation of immersive and personalized media experiences. Content creators can leverage these tools for:

Text-to-Video Generation: Crafting videos directly from textual descriptions.

Video Personalization: Customizing videos using user-provided images and content.

Video Editing: Enhancing and modifying existing videos with new audio-visual elements.

These capabilities have far-reaching implications for industries such as entertainment, advertising, education, and more, where dynamic and personalized content is increasingly in demand.

Conclusion

Meta AI’s MovieGen represents a monumental advancement in the field of media generation. With its sophisticated models and innovative techniques, it sets a new standard for what is possible in automated content creation. As AI continues to evolve, tools like MovieGen will play a pivotal role in shaping the future of media, offering unprecedented opportunities for creativity and expression.



Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.
