Dense retrieval (DR) models are an information retrieval (IR) approach that uses deep learning to map queries and passages into a shared embedding space. By comparing the query embedding with the passage embeddings in this space, the model can estimate how semantically related they are. DR models try to balance two crucial aspects: effectiveness, meaning the accuracy and relevance of the retrieved information, and efficiency, meaning the speed at which the model can process text and return relevant results.
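As a rough illustration of comparing embeddings, the sketch below ranks passages by cosine similarity to a query. The three-dimensional vectors, the passage ids, and the choice of cosine similarity are assumptions made for the example; in a real DR model the vectors come from the encoder, and the article does not say which similarity function is used.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_passages(query_vec, passage_vecs):
    """Return (passage_id, score) pairs sorted from most to least similar."""
    scored = [
        (pid, cosine_similarity(query_vec, vec))
        for pid, vec in passage_vecs.items()
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical embeddings; a trained encoder would produce these.
query_vec = [0.9, 0.1, 0.0]
passage_vecs = {
    "p1": [0.8, 0.2, 0.1],
    "p2": [0.0, 0.1, 0.9],
    "p3": [0.5, 0.5, 0.0],
}

ranking = rank_passages(query_vec, passage_vecs)
for pid, score in ranking:
    print(f"{pid}: {score:.3f}")
```

Because passage embeddings do not depend on the query, they can be computed once and stored ahead of time, which leaves only the query encoding and the similarity search to do at query time.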

Pre-trained language models (PLMs), especially those built on the Transformer architecture, have become effective encoders for queries and passages in DR models. Thanks to their self-attention mechanism, Transformer-based PLMs are very good at capturing complex semantic relationships and dependencies across long text sequences.

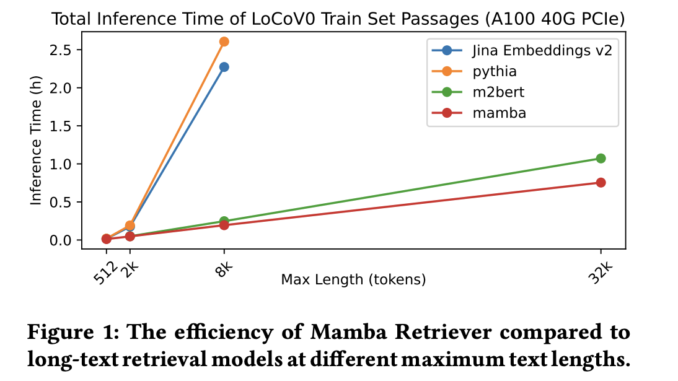

However, the computational complexity of Transformer-based PLMs is a significant disadvantage. Although powerful, the self-attention mechanism has a computational cost that grows quadratically with text sequence length. This means inference takes much longer as the text to be analyzed grows. The inefficiency becomes especially problematic in long-text retrieval tasks, where passages are lengthy and require substantial processing.
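To make the quadratic growth concrete, consider a sequence of $n$ tokens with hidden size $d$ and a constant factor $c$ (all three symbols are introduced here for illustration). Standard self-attention scores every token against every other token, so its dominant cost is roughly

$$
\mathrm{cost}_{\mathrm{attn}}(n) \approx c\, n^{2} d,
\qquad
\frac{\mathrm{cost}_{\mathrm{attn}}(2n)}{\mathrm{cost}_{\mathrm{attn}}(n)} \approx \frac{c\,(2n)^{2} d}{c\, n^{2} d} = 4 .
$$

Under this simplified model, doubling the passage length roughly quadruples the attention cost.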

To address these efficiency challenges, recent research has produced non-Transformer PLMs that aim to improve processing speed while offering comparable or even higher effectiveness. The Mamba architecture stands out among these. Mamba-based PLMs have been shown to be as effective as Transformer-based models in generative language tasks, that is, tasks that require producing text from inputs.

In contrast to the quadratic time scaling of Transformer-based models, Mamba PLMs scale linearly in time with sequence length. This makes them significantly faster on long-text retrieval tasks, since processing time grows much more slowly as the text lengthens. The research studies whether the Mamba architecture can serve as an encoder for DR models in IR tasks.
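The gap between linear and quadratic scaling can be sketched with some idealized arithmetic. The snippet below ignores constant factors, hardware effects, and everything except the growth exponent, so the numbers are relative growth factors and not measured timings; the base length of 512 tokens and the helper name `relative_cost` are assumptions chosen for illustration.

```python
def relative_cost(length, base_length, exponent):
    """Idealized cost at `length` relative to `base_length`.

    exponent=1 models linear scaling, exponent=2 models quadratic scaling.
    Constant factors are deliberately ignored.
    """
    return (length / base_length) ** exponent


BASE_LENGTH = 512  # hypothetical reference sequence length, in tokens

for length in (512, 2048, 8192):
    linear = relative_cost(length, BASE_LENGTH, 1)
    quadratic = relative_cost(length, BASE_LENGTH, 2)
    print(f"{length:>5} tokens: linear x{linear:.0f}, quadratic x{quadratic:.0f}")
```

In this toy model, going from 512 to 8192 tokens multiplies a linear-time cost by 16 and a quadratic-time cost by 256, which is why the difference matters most for long passages.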

In particular, the research presents the Mamba Retriever, a model intended to investigate whether Mamba can function as an encoder that is both efficient and effective. The Mamba Retriever has been fine-tuned on two important datasets: the MS MARCO passage ranking dataset, which is frequently used to evaluate short-text retrieval, and the LoCoV0 dataset, which is designed for long-text retrieval.
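The article does not describe the fine-tuning objective. Dense retrievers are commonly fine-tuned with a contrastive loss such as InfoNCE, which pushes the score of a relevant passage above the scores of irrelevant ones, so the sketch below assumes that objective purely for illustration. The function name, the scores, and the temperature are hypothetical and are not taken from the paper.

```python
import math


def info_nce_loss(pos_score, neg_scores, temperature=1.0):
    """Contrastive (InfoNCE-style) loss for a single query.

    pos_score:  similarity between the query and its relevant passage.
    neg_scores: similarities between the query and irrelevant passages.
    Returns -log(softmax probability assigned to the relevant passage).
    """
    logits = [pos_score / temperature] + [s / temperature for s in neg_scores]
    # Subtract the max logit before exponentiating for numerical stability.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(
        sum(math.exp(logit - max_logit) for logit in logits)
    )
    return log_sum_exp - logits[0]


# Hypothetical similarity scores for one training query.
good_ranking_loss = info_nce_loss(pos_score=5.0, neg_scores=[0.0, 0.0])
bad_ranking_loss = info_nce_loss(pos_score=0.0, neg_scores=[5.0, 5.0])
print(f"relevant passage scored highest: {good_ranking_loss:.4f}")
print(f"relevant passage scored lowest:  {bad_ranking_loss:.4f}")
```

The loss is small when the relevant passage already has the highest score and large when negatives outscore it, which is the signal that moves the encoder during fine-tuning under this assumed setup.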

The team has summarized their primary contributions as follows.

The Mamba Retriever has been created with the goal of being both efficient and effective in information retrieval (IR) tasks. The model's architecture balances fast processing with high retrieval accuracy.

The team studied how the Mamba Retriever's effectiveness changes with model size. Experiments on the MS MARCO passage ranking dataset and BEIR showed that the Mamba Retriever is comparable to or better than Transformer-based retrievers in terms of effectiveness. Effectiveness also rises with model size, suggesting that larger Mamba models are able to capture more intricate semantic information.

The team also studied the Mamba Retriever's effectiveness on long-text retrieval tasks. Using the LoCoV0 dataset, they demonstrated that, with fine-tuning, the Mamba Retriever can handle text sequences longer than its pre-training length, reaching effectiveness on par with or better than previous models made for long-text retrieval.

The team studied the Mamba Retriever's inference efficiency at different passage lengths. According to the findings, the Mamba Retriever excels in inference speed and benefits from linear time scaling, which makes it especially well suited to long-text information retrieval applications.

In conclusion, the Mamba Retriever is an efficient and effective model for information retrieval, especially in long-text retrieval scenarios. Its fast inference speed and high effectiveness set it apart from more conventional Transformer-based models and make it a viable option for a variety of retrieval tasks.

Check out the Paper. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a data science enthusiast with strong analytical and critical thinking skills and a keen interest in acquiring new skills, leading groups, and managing work in an organized manner.