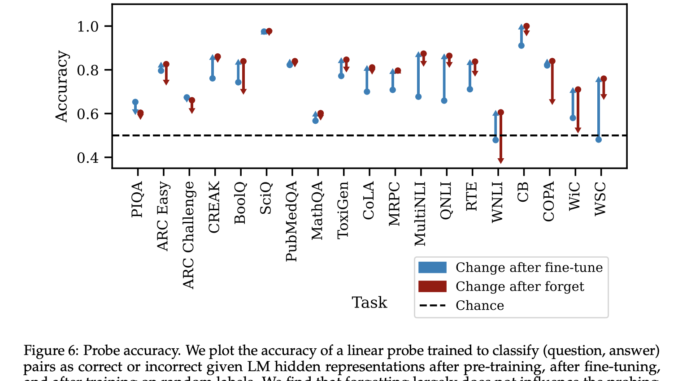

This AI Paper from MIT Explores the Complexities of Teaching Language Models to Forget: Insights from Randomized Fine-Tuning

Language models (LMs) have gained significant attention in recent years due to their remarkable capabilities. In a typical training pipeline, these neural sequence models are first pre-trained on large, minimally curated web text and then fine-tuned […]